
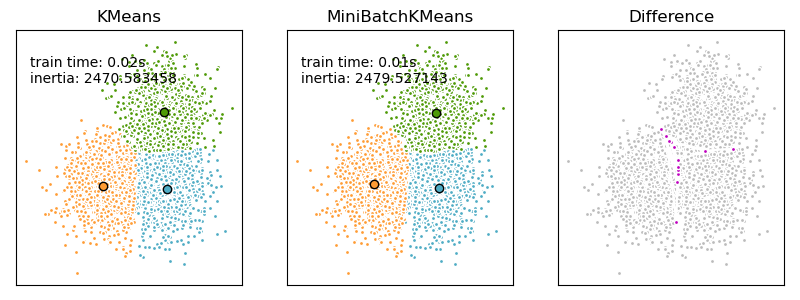

Comparison of the K-Means and MiniBatchKMeans clustering algorithms

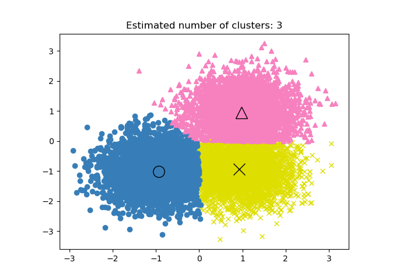

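We want to compare the performance of MiniBatchKMeans and KMeans: MiniBatchKMeans is faster, but gives slightly different results (see Mini Batch K-Means).

We will cluster a set of data, first with KMeans and then with MiniBatchKMeans, and plot the results. We will also plot the points that are labelled differently by the two algorithms.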

# Authors: The scikit-learn developers

# SPDX-License-Identifier: BSD-3-Clause

Generate the data

We start by generating the blobs of data to be clustered.

import numpy as np

from sklearn.datasets import make_blobs

np.random.seed(0)

batch_size = 45

centers = [[1, 1], [-1, -1], [1, -1]]

n_clusters = len(centers)

X, labels_true = make_blobs(n_samples=3000, centers=centers, cluster_std=0.7)
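As a rough illustration of what the generated data looks like, the following NumPy-only sketch draws one isotropic Gaussian cloud per center and shuffles the result. It is conceptually similar to the `make_blobs` call above but is not scikit-learn's implementation; the names `demo_centers`, `X_demo`, and `labels_demo` are hypothetical and exist only for this sketch.

```python
import numpy as np

# Local generator for the sketch; the example itself relies on np.random.seed(0).
rng = np.random.default_rng(0)

demo_centers = np.array([[1, 1], [-1, -1], [1, -1]], dtype=float)
n_samples, cluster_std = 3000, 0.7
per_cluster = n_samples // len(demo_centers)

# One isotropic Gaussian cloud per center, each tagged with its center's index.
X_demo = np.concatenate(
    [c + cluster_std * rng.standard_normal((per_cluster, 2)) for c in demo_centers]
)
labels_demo = np.repeat(np.arange(len(demo_centers)), per_cluster)

# Shuffle points and labels together so clusters are not stored contiguously.
perm = rng.permutation(n_samples)
X_demo, labels_demo = X_demo[perm], labels_demo[perm]
```

With a standard deviation of 0.7 and centers only 2 units apart, neighbouring blobs overlap, which is why points near the boundaries can end up with different labels under the two algorithms.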

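Compute clustering with KMeans

import time

from sklearn.cluster import KMeans

k_means = KMeans(init="k-means++", n_clusters=3, n_init=10)

t0 = time.time()

k_means.fit(X)

t_batch = time.time() - t0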

Compute clustering with MiniBatchKMeans

from sklearn.cluster import MiniBatchKMeans

mbk = MiniBatchKMeans(

    init="k-means++",

    n_clusters=3,

    batch_size=batch_size,

    n_init=10,

    max_no_improvement=10,

    verbose=0,

)

t0 = time.time()

mbk.fit(X)

t_mini_batch = time.time() - t0
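For intuition about why the mini-batch variant is cheaper per iteration yet can land on slightly different centers, here is a simplified NumPy sketch of a mini-batch update: each step looks at only a small random batch and moves each center toward its assigned points with a per-center step size of 1/count. This is an illustration under simplifying assumptions, not scikit-learn's implementation; `minibatch_step` and the `*_demo` variables are hypothetical names.

```python
import numpy as np


def minibatch_step(centers, counts, batch):
    """Update `centers` and `counts` in place from one mini-batch."""
    # Assign every batch point to its nearest center (Euclidean distance).
    dists = np.linalg.norm(batch[:, None, :] - centers[None, :, :], axis=2)
    nearest = dists.argmin(axis=1)
    for x, j in zip(batch, nearest):
        counts[j] += 1
        eta = 1.0 / counts[j]  # per-center step size shrinks as the center sees more points
        centers[j] = (1.0 - eta) * centers[j] + eta * x


rng = np.random.default_rng(0)
true_centers = np.array([[1.0, 1.0], [-1.0, -1.0], [1.0, -1.0]])
X_demo = np.concatenate(
    [c + 0.7 * rng.standard_normal((1000, 2)) for c in true_centers]
)

# Start from three random data points and run 100 mini-batch steps of 45 points each.
centers_demo = X_demo[rng.choice(len(X_demo), size=3, replace=False)].copy()
counts_demo = np.zeros(3, dtype=int)
for _ in range(100):
    batch = X_demo[rng.choice(len(X_demo), size=45, replace=False)]
    minibatch_step(centers_demo, counts_demo, batch)
```

Because each step touches 45 points instead of all 3000, the result depends on which batches happen to be drawn, which is one source of the small differences between the two fitted models.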

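Establishing parity between clusters

We want the same cluster to have the same color for both the MiniBatchKMeans and the KMeans algorithm. Let's pair each cluster center with its closest counterpart.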

from sklearn.metrics.pairwise import pairwise_distances_argmin

k_means_cluster_centers = k_means.cluster_centers_

order = pairwise_distances_argmin(k_means.cluster_centers_, mbk.cluster_centers_)

mbk_means_cluster_centers = mbk.cluster_centers_[order]

k_means_labels = pairwise_distances_argmin(X, k_means_cluster_centers)

mbk_means_labels = pairwise_distances_argmin(X, mbk_means_cluster_centers)
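To make the pairing step concrete, the sketch below mimics it on made-up centers using plain NumPy. The helper `nearest_index` is hypothetical; it reproduces what `pairwise_distances_argmin` returns for Euclidean distance, namely the index of the closest row of the second array for each row of the first.

```python
import numpy as np


def nearest_index(A, B):
    """For each row of A, return the index of the closest row of B (Euclidean)."""
    # Broadcast to shape (len(A), len(B), n_features), then reduce over features.
    dists = np.linalg.norm(A[:, None, :] - B[None, :, :], axis=2)
    return dists.argmin(axis=1)


# Toy centers: the second set holds roughly the same centers in a different order.
centers_a = np.array([[1.0, 1.0], [-1.0, -1.0], [1.0, -1.0]])
centers_b = np.array([[0.9, -1.1], [1.1, 0.9], [-1.0, -0.9]])

order_demo = nearest_index(centers_a, centers_b)
# After reordering, row i of aligned_b is the counterpart of row i of centers_a.
aligned_b = centers_b[order_demo]
```

Note that this nearest-neighbour pairing yields a one-to-one match only when the two sets of centers are close to each other, which is the case when both algorithms converge to similar solutions.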

Plot the results

import matplotlib.pyplot as plt

fig = plt.figure(figsize=(8, 3))

fig.subplots_adjust(left=0.02, right=0.98, bottom=0.05, top=0.9)

colors = ["#4EACC5", "#FF9C34", "#4E9A06"]

# KMeans

ax = fig.add_subplot(1, 3, 1)

for k, col in zip(range(n_clusters), colors):

    my_members = k_means_labels == k

    cluster_center = k_means_cluster_centers[k]

    ax.plot(X[my_members, 0], X[my_members, 1], "w", markerfacecolor=col, marker=".")

    ax.plot(

        cluster_center[0],

        cluster_center[1],

        "o",

        markerfacecolor=col,

        markeredgecolor="k",

        markersize=6,

    )

ax.set_title("KMeans")

ax.set_xticks(())

ax.set_yticks(())

plt.text(-3.5, 1.8, "train time: %.2fs\ninertia: %f" % (t_batch, k_means.inertia_))

# MiniBatchKMeans

ax = fig.add_subplot(1, 3, 2)

for k, col in zip(range(n_clusters), colors):

    my_members = mbk_means_labels == k

    cluster_center = mbk_means_cluster_centers[k]

    ax.plot(X[my_members, 0], X[my_members, 1], "w", markerfacecolor=col, marker=".")

    ax.plot(

        cluster_center[0],

        cluster_center[1],

        "o",

        markerfacecolor=col,

        markeredgecolor="k",

        markersize=6,

    )

ax.set_title("MiniBatchKMeans")

ax.set_xticks(())

ax.set_yticks(())

plt.text(-3.5, 1.8, "train time: %.2fs\ninertia: %f" % (t_mini_batch, mbk.inertia_))

# Initialize the different array to all False

different = mbk_means_labels == 4

ax = fig.add_subplot(1, 3, 3)

for k in range(n_clusters):

    different += (k_means_labels == k) != (mbk_means_labels == k)

identical = np.logical_not(different)

ax.plot(X[identical, 0], X[identical, 1], "w", markerfacecolor="#bbbbbb", marker=".")

ax.plot(X[different, 0], X[different, 1], "w", markerfacecolor="m", marker=".")

ax.set_title("Difference")

ax.set_xticks(())

ax.set_yticks(())

plt.show()
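Beyond the visual comparison, the disagreement can also be summarized as a number. The sketch below uses made-up label arrays (`labels_a` and `labels_b` are hypothetical stand-ins for `k_means_labels` and `mbk_means_labels`) and shows that the per-cluster accumulation used for the "Difference" panel gives the same mask as a direct element-wise comparison.

```python
import numpy as np

# Illustrative labels only; in the example these would be the two aligned label arrays.
labels_a = np.array([0, 0, 1, 1, 2, 2, 0, 1])
labels_b = np.array([0, 0, 1, 2, 2, 2, 1, 1])
n_clusters_demo = 3

# Per-cluster accumulation, starting from an all-False mask.
different_loop = np.zeros(labels_a.shape, dtype=bool)
for k in range(n_clusters_demo):
    different_loop |= (labels_a == k) != (labels_b == k)

# Direct comparison of the two label arrays.
different_direct = labels_a != labels_b

n_different = int(different_direct.sum())
fraction_different = float(different_direct.mean())
print(f"{n_different} of {labels_a.size} points differ ({fraction_different:.0%})")
```

The direct comparison is only meaningful once the cluster indices of the two models have been aligned, as done in the parity step of the example.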

Total running time of the script: (0 minutes 0.210 seconds)
