
Custom refit strategy of a grid search with cross-validation

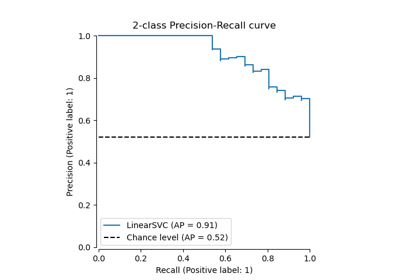

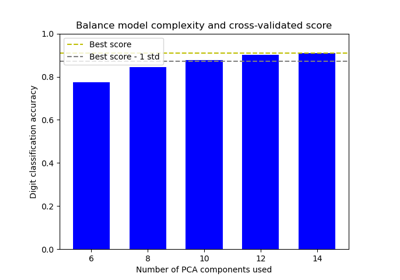

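This example shows how a classifier is optimized by cross-validation. The optimization is done with a `GridSearchCV` object on a development set that contains only half of the available labeled data.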

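The performance of the selected hyper-parameters and trained model is then measured on a dedicated evaluation set that was not used during the model selection step.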

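More details on the tools available for model selection can be found in the sections on Cross-validation: evaluating estimator performance and Tuning the hyper-parameters of an estimator.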

```python
# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause
```

The dataset

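We will work with the digits dataset. The goal is to classify images of handwritten digits. To keep the problem easy to follow, we turn it into a binary classification task: the goal is to identify whether a digit is an 8 or not.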

```python
from sklearn import datasets

digits = datasets.load_digits()
```

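To train a classifier on images, we need to flatten them into vectors. Each 8 x 8 pixel image has to be transformed into a vector of 64 pixels. We therefore get a final data array of shape (n_images, n_pixels).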

```python
n_samples = len(digits.images)
X = digits.images.reshape((n_samples, -1))
y = digits.target == 8
print(
    f"The number of images is {X.shape[0]} and each image contains {X.shape[1]} pixels"
)
```

```text
The number of images is 1797 and each image contains 64 pixels
```
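As a quick sanity check of the two transformations above, here is a small sketch. It assumes that `digits.data` (which `load_digits` already returns in flattened form) should match the manually reshaped array. It also shows that the binary target is imbalanced: only about one image in ten is an 8. This imbalance is one reason why precision and recall are more informative here than plain accuracy.

```python
import numpy as np
from sklearn import datasets

digits = datasets.load_digits()
n_samples = len(digits.images)

# Flatten each 8x8 image into a 64-element row vector.
X = digits.images.reshape((n_samples, -1))

# load_digits also exposes the images already flattened in `digits.data`,
# so both arrays should be identical.
print(np.array_equal(X, digits.data))

# Binary target: True for images of the digit 8, False otherwise.
y = digits.target == 8
print(f"positives: {y.sum()} / {y.size} ({y.mean():.1%})")
```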

As stated in the introduction, the data will be split into a training set and a testing set of equal size.

```python
from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5, random_state=0)
```
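The sketch below inspects the split. With 1797 samples and `test_size=0.5`, `train_test_split` rounds the test size up, so the two halves differ by one sample. This split is not stratified. If you want the same proportion of 8s in both halves, you could pass `stratify=y` (an optional variation, not what this example does).

```python
from sklearn import datasets
from sklearn.model_selection import train_test_split

digits = datasets.load_digits()
X = digits.images.reshape((len(digits.images), -1))
y = digits.target == 8

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.5, random_state=0)

# With an odd number of samples, the test half receives the extra sample.
print(X_train.shape, X_test.shape)

# Fraction of positive (digit 8) samples in each half.
print(f"train positives: {y_train.mean():.3f}, test positives: {y_test.mean():.3f}")
```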

Define our grid-search strategy

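We will select a classifier by searching for the best hyper-parameters on folds of the training set. To do this, we need to define the scores used to select the best candidate.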

```python
scores = ["precision", "recall"]
```
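Passing a list of scorer names makes the search multi-metric. Each metric then gets its own `mean_test_<name>`, `std_test_<name>` and `rank_test_<name>` entries in `cv_results_`, and the custom refit strategy later relies on those columns. The sketch below checks this on a small synthetic dataset (an assumption for illustration only) with `LogisticRegression`. It uses `refit=False`, so no final model is refitted.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

# Small synthetic binary problem, used only to inspect the result keys.
X, y = make_classification(n_samples=200, random_state=0)

search = GridSearchCV(
    LogisticRegression(),
    {"C": [0.1, 1.0]},
    scoring=["precision", "recall"],
    refit=False,  # multi-metric search without selecting a single best model
)
search.fit(X, y)

# Each metric gets its own mean/std/rank entries in cv_results_.
metric_keys = sorted(
    k for k in search.cv_results_ if k.startswith(("mean_test", "rank_test"))
)
print(metric_keys)
```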

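We can also define a function to pass to the `refit` parameter of the `GridSearchCV` instance. This function implements a custom strategy for selecting the best candidate from the `cv_results_` attribute of the `GridSearchCV`. Once a candidate is selected, the `GridSearchCV` instance automatically refits it.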

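Here, the strategy is to short-list the models that perform best in terms of precision and recall. From the short-listed models, we then select the one that is fastest at predicting. Note that these custom choices are completely arbitrary.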
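Before the full strategy, here is a minimal sketch of the contract a refit callable has to satisfy. It receives the `cv_results_` dictionary and returns an integer index into its rows. `best_index_`, `best_params_` and `best_estimator_` then follow that index. According to the scikit-learn documentation, `best_score_` is not available when `refit` is a function. The function name `lowest_score_time` and the synthetic data are hypothetical, chosen only for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC


def lowest_score_time(cv_results):
    """Hypothetical refit callable: pick the candidate that scores fastest."""
    # cv_results is the dict later exposed as `cv_results_`; the callable
    # must return an integer index into its rows.
    return int(np.argmin(cv_results["mean_score_time"]))


X, y = make_classification(n_samples=200, random_state=0)
search = GridSearchCV(
    SVC(),
    {"C": [0.1, 1.0, 10.0]},
    scoring=["precision", "recall"],
    refit=lowest_score_time,
)
search.fit(X, y)

# best_index_ and best_params_ follow the index returned by the callable.
print(search.best_index_, search.best_params_)
# best_score_ is not set when refit is a callable.
print(hasattr(search, "best_score_"))
```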

```python
import pandas as pd


def print_dataframe(filtered_cv_results):
    """Pretty print for filtered dataframe"""
    for mean_precision, std_precision, mean_recall, std_recall, params in zip(
        filtered_cv_results["mean_test_precision"],
        filtered_cv_results["std_test_precision"],
        filtered_cv_results["mean_test_recall"],
        filtered_cv_results["std_test_recall"],
        filtered_cv_results["params"],
    ):
        print(
            f"precision: {mean_precision:0.3f} (±{std_precision:0.3f}),"
            f" recall: {mean_recall:0.3f} (±{std_recall:0.3f}),"
            f" for {params}"
        )
    print()


def refit_strategy(cv_results):
    """Define the strategy to select the best estimator.

    The strategy defined here is to filter out all results below a precision
    threshold of 0.98, rank the remaining ones by recall and keep all models
    within one standard deviation of the best by recall. Once these models are
    selected, we select the fastest model to predict.

    Parameters
    ----------
    cv_results : dict of numpy (masked) ndarrays
        CV results as returned by the `GridSearchCV`.

    Returns
    -------
    best_index : int
        The index of the best estimator as it appears in `cv_results`.
    """
    precision_threshold = 0.98

    # print the info about the grid-search for the different scores
    cv_results_ = pd.DataFrame(cv_results)
    print("All grid-search results:")
    print_dataframe(cv_results_)

    # Filter out all results below the threshold
    high_precision_cv_results = cv_results_[
        cv_results_["mean_test_precision"] > precision_threshold
    ]

    print(f"Models with a precision higher than {precision_threshold}:")
    print_dataframe(high_precision_cv_results)

    high_precision_cv_results = high_precision_cv_results[
        [
            "mean_score_time",
            "mean_test_recall",
            "std_test_recall",
            "mean_test_precision",
            "std_test_precision",
            "rank_test_recall",
            "rank_test_precision",
            "params",
        ]
    ]

    # Select the most performant models in terms of recall: keep those within
    # one standard deviation (computed across the remaining candidates) of the best
    best_recall_std = high_precision_cv_results["mean_test_recall"].std()
    best_recall = high_precision_cv_results["mean_test_recall"].max()
    best_recall_threshold = best_recall - best_recall_std

    high_recall_cv_results = high_precision_cv_results[
        high_precision_cv_results["mean_test_recall"] > best_recall_threshold
    ]
    print(
        "Out of the previously selected high precision models, we keep all\n"
        "the models within one standard deviation of the highest recall model:"
    )
    print_dataframe(high_recall_cv_results)

    # From the best candidates, select the fastest model to predict
    fastest_top_recall_high_precision_index = high_recall_cv_results[
        "mean_score_time"
    ].idxmin()

    print(
        "\nThe selected final model is the fastest to predict out of the previously\n"
        "selected subset of best models based on precision and recall.\n"
        "Its scoring time is:\n\n"
        f"{high_recall_cv_results.loc[fastest_top_recall_high_precision_index]}"
    )

    return fastest_top_recall_high_precision_index
```
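`refit_strategy` assumes that each filter leaves at least one candidate. If exactly one model passes the precision threshold, `Series.std()` (sample standard deviation, `ddof=1`) returns NaN. Comparing against NaN is always False, so the recall filter keeps nothing and `idxmin` then fails on an empty Series. If no model passes the threshold, the same failure happens one step earlier. The sketch below is a guarded variant of the same three-step strategy, not the example's own code. It falls back to the highest-precision candidates when none pass the threshold, treats a NaN spread as 0, and uses `>=` so that the best-recall model always survives its own filter. The name `guarded_refit_strategy` and the fallback rules are assumptions.

```python
import numpy as np
import pandas as pd


def guarded_refit_strategy(cv_results, precision_threshold=0.98):
    """Hypothetical variant of refit_strategy with fallbacks for empty filters."""
    df = pd.DataFrame(cv_results)

    # Step 1: keep candidates above the precision threshold; if none pass,
    # fall back to the candidates with the highest mean precision.
    kept = df[df["mean_test_precision"] > precision_threshold]
    if kept.empty:
        kept = df[df["mean_test_precision"] == df["mean_test_precision"].max()]

    # Step 2: keep candidates within one standard deviation of the best recall.
    # With a single remaining candidate, .std() is NaN, so treat it as 0.
    recall = kept["mean_test_recall"]
    spread = recall.std()
    if np.isnan(spread):
        spread = 0.0
    kept = kept[recall >= recall.max() - spread]

    # Step 3: among the survivors, return the index of the fastest to score.
    return int(kept["mean_score_time"].idxmin())
```

Like the original, it can be passed as `refit=guarded_refit_strategy`. It just omits the diagnostic printing.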

Tuning hyper-parameters

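Once we have defined our strategy for selecting the best model, we define the values of the hyper-parameters and create the grid-search instance: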

```python
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

tuned_parameters = [
    {"kernel": ["rbf"], "gamma": [1e-3, 1e-4], "C": [1, 10, 100, 1000]},
    {"kernel": ["linear"], "C": [1, 10, 100, 1000]},
]

grid_search = GridSearchCV(
    SVC(), tuned_parameters, scoring=scores, refit=refit_strategy
)
grid_search.fit(X_train, y_train)
```

```text
All grid-search results:
precision: 1.000 (±0.000), recall: 0.854 (±0.063), for {'C': 1, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.257 (±0.061), for {'C': 1, 'gamma': 0.0001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.877 (±0.069), for {'C': 10, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 0.968 (±0.039), recall: 0.780 (±0.083), for {'C': 10, 'gamma': 0.0001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.877 (±0.069), for {'C': 100, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 0.905 (±0.058), recall: 0.889 (±0.074), for {'C': 100, 'gamma': 0.0001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.877 (±0.069), for {'C': 1000, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 0.904 (±0.058), recall: 0.890 (±0.073), for {'C': 1000, 'gamma': 0.0001, 'kernel': 'rbf'}
precision: 0.695 (±0.073), recall: 0.743 (±0.065), for {'C': 1, 'kernel': 'linear'}
precision: 0.643 (±0.066), recall: 0.757 (±0.066), for {'C': 10, 'kernel': 'linear'}
precision: 0.611 (±0.028), recall: 0.744 (±0.044), for {'C': 100, 'kernel': 'linear'}
precision: 0.618 (±0.039), recall: 0.744 (±0.044), for {'C': 1000, 'kernel': 'linear'}

Models with a precision higher than 0.98:
precision: 1.000 (±0.000), recall: 0.854 (±0.063), for {'C': 1, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.257 (±0.061), for {'C': 1, 'gamma': 0.0001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.877 (±0.069), for {'C': 10, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.877 (±0.069), for {'C': 100, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.877 (±0.069), for {'C': 1000, 'gamma': 0.001, 'kernel': 'rbf'}

Out of the previously selected high precision models, we keep all
the models within one standard deviation of the highest recall model:
precision: 1.000 (±0.000), recall: 0.854 (±0.063), for {'C': 1, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.877 (±0.069), for {'C': 10, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.877 (±0.069), for {'C': 100, 'gamma': 0.001, 'kernel': 'rbf'}
precision: 1.000 (±0.000), recall: 0.877 (±0.069), for {'C': 1000, 'gamma': 0.001, 'kernel': 'rbf'}


The selected final model is the fastest to predict out of the previously
selected subset of best models based on precision and recall.
Its scoring time is:

mean_score_time                                         0.006393
mean_test_recall                                        0.853676
std_test_recall                                         0.063184
mean_test_precision                                          1.0
std_test_precision                                           0.0
rank_test_recall                                               6
rank_test_precision                                            1
params                 {'C': 1, 'gamma': 0.001, 'kernel': 'rbf'}
Name: 0, dtype: object
```
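The log above lists 12 candidates. The grid is a list of two sub-grids: 2 `gamma` values times 4 `C` values for the RBF kernel, plus 4 `C` values for the linear kernel. The short sketch below counts them with `ParameterGrid`. It assumes the default `cv=None`, which means 5-fold cross-validation in current scikit-learn versions. Under that assumption, the search fits 12 × 5 = 60 models, plus one final refit of the selected candidate on the whole training set.

```python
from sklearn.model_selection import ParameterGrid

# Same grid as tuned_parameters above: a list of two sub-grids.
tuned_parameters = [
    {"kernel": ["rbf"], "gamma": [1e-3, 1e-4], "C": [1, 10, 100, 1000]},
    {"kernel": ["linear"], "C": [1, 10, 100, 1000]},
]

n_candidates = len(ParameterGrid(tuned_parameters))
n_splits = 5  # assumed: GridSearchCV's default number of folds when cv=None
print(n_candidates, n_candidates * n_splits)
```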

The parameters selected by the grid search with our custom strategy are:

```python
grid_search.best_params_
```

```text
{'C': 1, 'gamma': 0.001, 'kernel': 'rbf'}
```

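Finally, we evaluate the fine-tuned model on the left-out evaluation set. The `grid_search` object has already been refitted automatically on the full training set, using the parameters selected by our custom refit strategy.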

We can use the classification report to compute standard classification metrics on the left-out set:

```python
from sklearn.metrics import classification_report

y_pred = grid_search.predict(X_test)
print(classification_report(y_test, y_pred))
```

```text
              precision    recall  f1-score   support

       False       0.98      1.00      0.99       807
        True       1.00      0.85      0.92        92

    accuracy                           0.98       899
   macro avg       0.99      0.92      0.95       899
weighted avg       0.98      0.98      0.98       899
```
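To make the `True` row of the report concrete: precision is TP / (TP + FP), the share of predicted 8s that really are 8s. Recall is TP / (TP + FN), the share of real 8s that were found. A recall of 0.85 on the evaluation set therefore means that roughly 15% of the 8s were missed. The toy labels below are hypothetical. They only show that the hand-computed ratios agree with `precision_score` and `recall_score`.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, precision_score, recall_score

# Hypothetical toy labels; True means "is an 8".
y_true = np.array([True, True, True, True, False, False, False, False])
y_pred = np.array([True, True, True, False, False, False, False, False])

# For labels ordered [False, True], ravel() yields tn, fp, fn, tp.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

precision = tp / (tp + fp)  # fraction of predicted 8s that are really 8s
recall = tp / (tp + fn)  # fraction of real 8s that were found
print(precision, recall)
print(precision_score(y_true, y_pred), recall_score(y_true, y_pred))
```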

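Note

The problem is too easy: the hyper-parameter plateau is too flat. As a result, the output model is the same for precision and recall, with ties in quality.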

Total running time of the script: (0 minutes 10.408 seconds)
