注意

跳至末尾下載完整範例程式碼。或透過 JupyterLite 或 Binder 在您的瀏覽器中執行此範例

歸納式聚類#

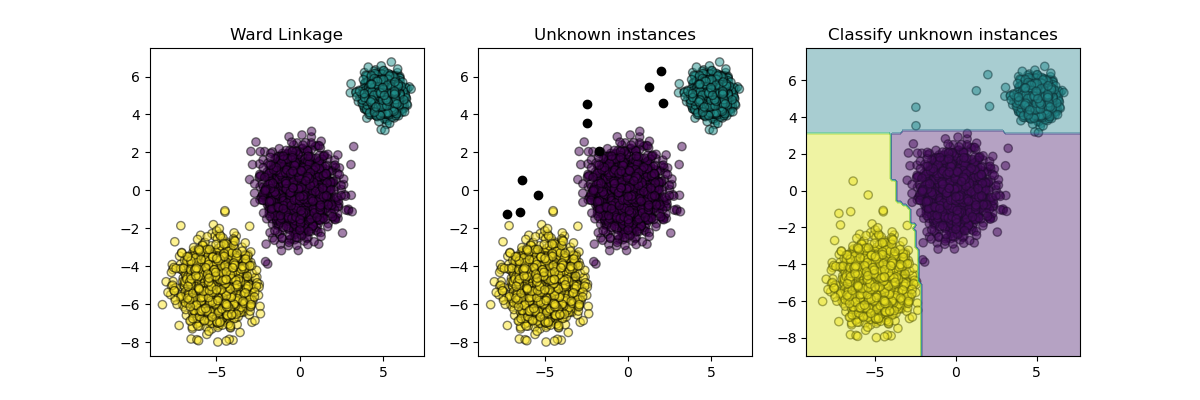

聚類可能很耗費資源,尤其當我們的資料集包含數百萬個資料點時。許多聚類演算法不是歸納式,因此無法直接應用於新的資料樣本,而不重新計算聚類,這可能難以處理。相反地,我們可以利用聚類來學習具有分類器的歸納式模型,這有幾個好處

它允許聚類擴展並應用於新資料

與將聚類重新擬合到新樣本不同,它確保標記程序在時間上是一致的

它允許我們使用分類器的推論能力來描述或解釋聚類

此範例說明了元估計器的通用實作,該估計器透過從聚類標籤誘導分類器來擴展聚類。

# Authors: The scikit-learn developers

# SPDX-License-Identifier: BSD-3-Clause

import matplotlib.pyplot as plt

from sklearn.base import BaseEstimator, clone

from sklearn.cluster import AgglomerativeClustering

from sklearn.datasets import make_blobs

from sklearn.ensemble import RandomForestClassifier

from sklearn.inspection import DecisionBoundaryDisplay

from sklearn.utils.metaestimators import available_if

from sklearn.utils.validation import check_is_fitted

N_SAMPLES = 5000

RANDOM_STATE = 42

def _classifier_has(attr):

"""Check if we can delegate a method to the underlying classifier.

First, we check the first fitted classifier if available, otherwise we

check the unfitted classifier.

"""

return lambda estimator: (

hasattr(estimator.classifier_, attr)

if hasattr(estimator, "classifier_")

else hasattr(estimator.classifier, attr)

)

class InductiveClusterer(BaseEstimator):

def __init__(self, clusterer, classifier):

self.clusterer = clusterer

self.classifier = classifier

def fit(self, X, y=None):

self.clusterer_ = clone(self.clusterer)

self.classifier_ = clone(self.classifier)

y = self.clusterer_.fit_predict(X)

self.classifier_.fit(X, y)

return self

@available_if(_classifier_has("predict"))

def predict(self, X):

check_is_fitted(self)

return self.classifier_.predict(X)

@available_if(_classifier_has("decision_function"))

def decision_function(self, X):

check_is_fitted(self)

return self.classifier_.decision_function(X)

def plot_scatter(X, color, alpha=0.5):

return plt.scatter(X[:, 0], X[:, 1], c=color, alpha=alpha, edgecolor="k")

# Generate some training data from clustering

X, y = make_blobs(

n_samples=N_SAMPLES,

cluster_std=[1.0, 1.0, 0.5],

centers=[(-5, -5), (0, 0), (5, 5)],

random_state=RANDOM_STATE,

)

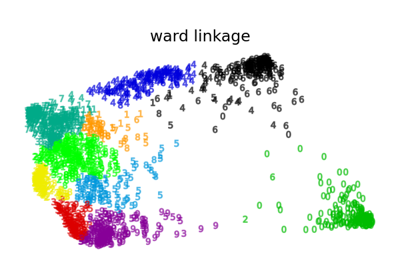

# Train a clustering algorithm on the training data and get the cluster labels

clusterer = AgglomerativeClustering(n_clusters=3)

cluster_labels = clusterer.fit_predict(X)

plt.figure(figsize=(12, 4))

plt.subplot(131)

plot_scatter(X, cluster_labels)

plt.title("Ward Linkage")

# Generate new samples and plot them along with the original dataset

X_new, y_new = make_blobs(

n_samples=10, centers=[(-7, -1), (-2, 4), (3, 6)], random_state=RANDOM_STATE

)

plt.subplot(132)

plot_scatter(X, cluster_labels)

plot_scatter(X_new, "black", 1)

plt.title("Unknown instances")

# Declare the inductive learning model that it will be used to

# predict cluster membership for unknown instances

classifier = RandomForestClassifier(random_state=RANDOM_STATE)

inductive_learner = InductiveClusterer(clusterer, classifier).fit(X)

probable_clusters = inductive_learner.predict(X_new)

ax = plt.subplot(133)

plot_scatter(X, cluster_labels)

plot_scatter(X_new, probable_clusters)

# Plotting decision regions

DecisionBoundaryDisplay.from_estimator(

inductive_learner, X, response_method="predict", alpha=0.4, ax=ax

)

plt.title("Classify unknown instances")

plt.show()

腳本的總執行時間:(0 分鐘 2.242 秒)

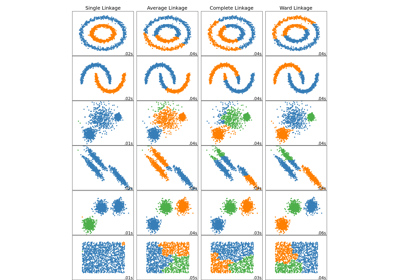

相關範例