
Topic extraction with Non-negative Matrix Factorization and Latent Dirichlet Allocation

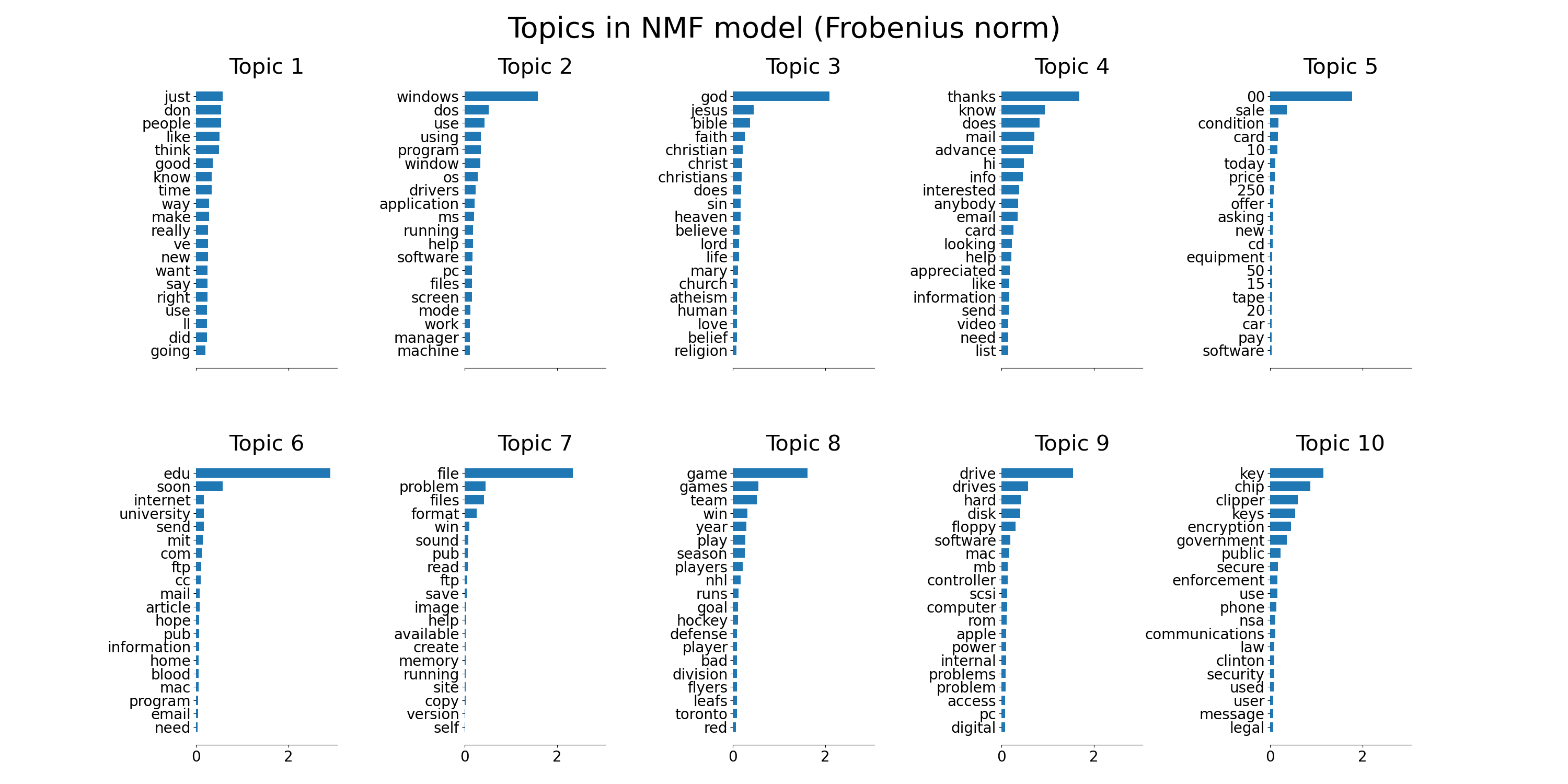

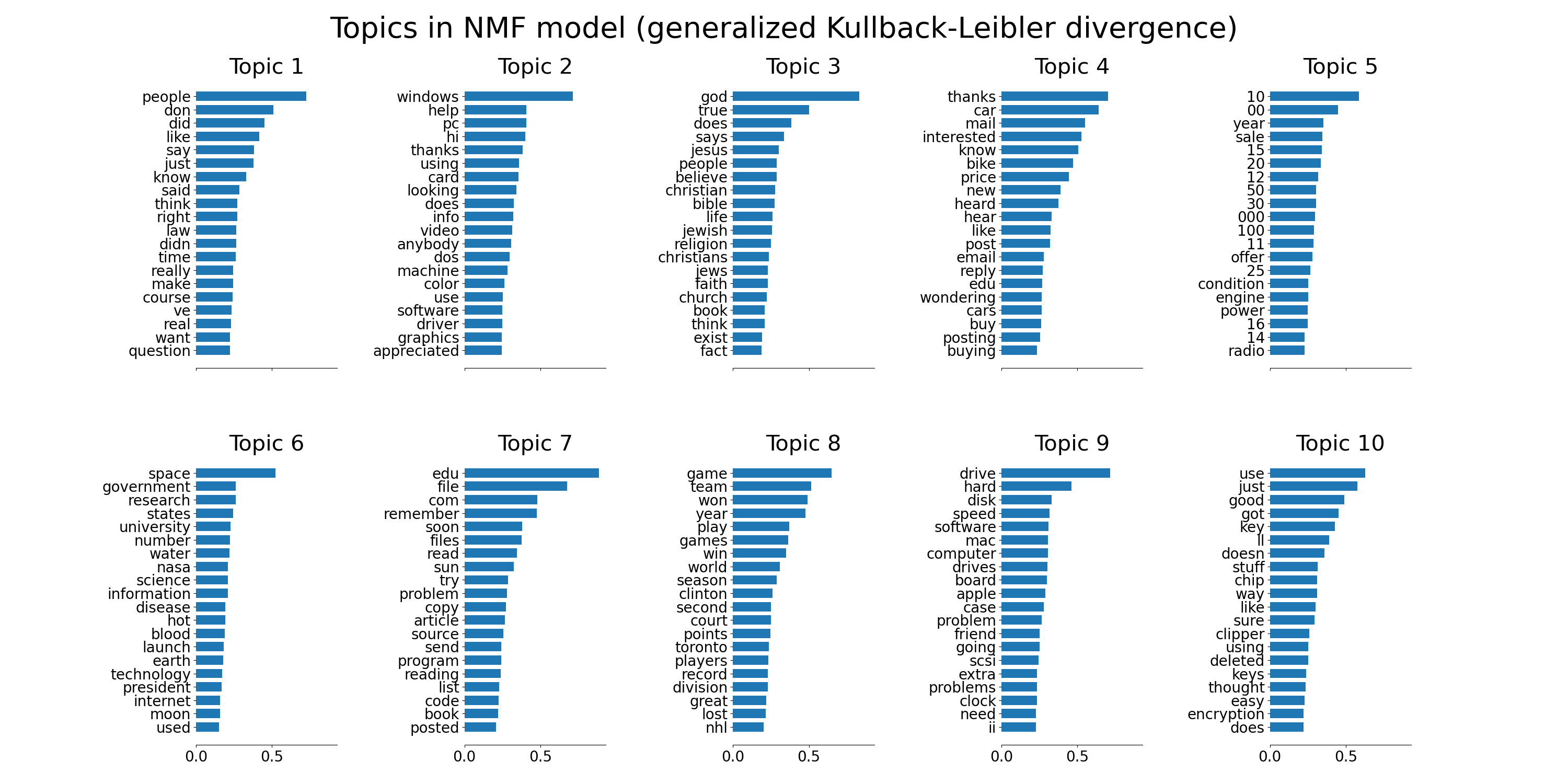

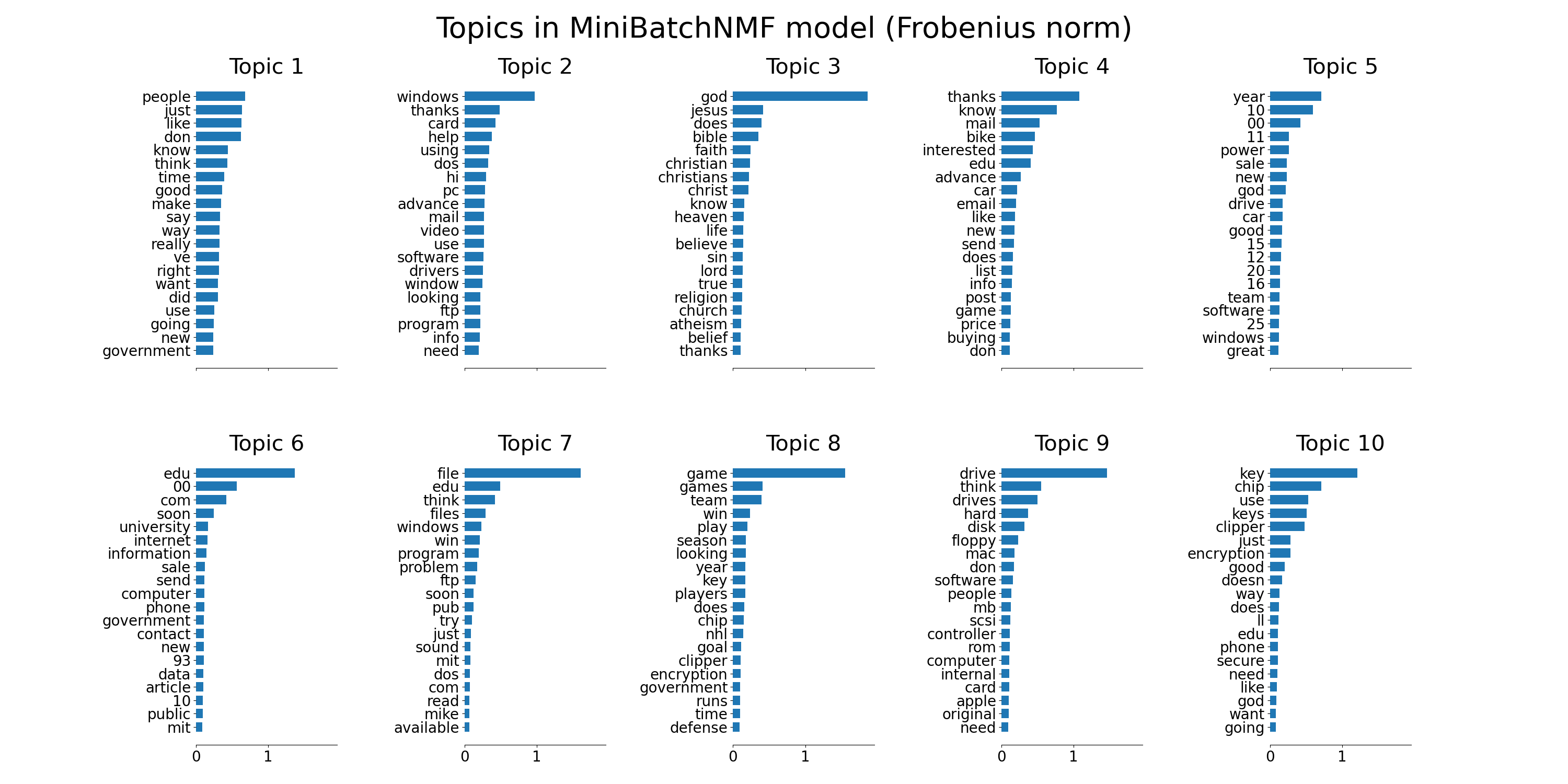

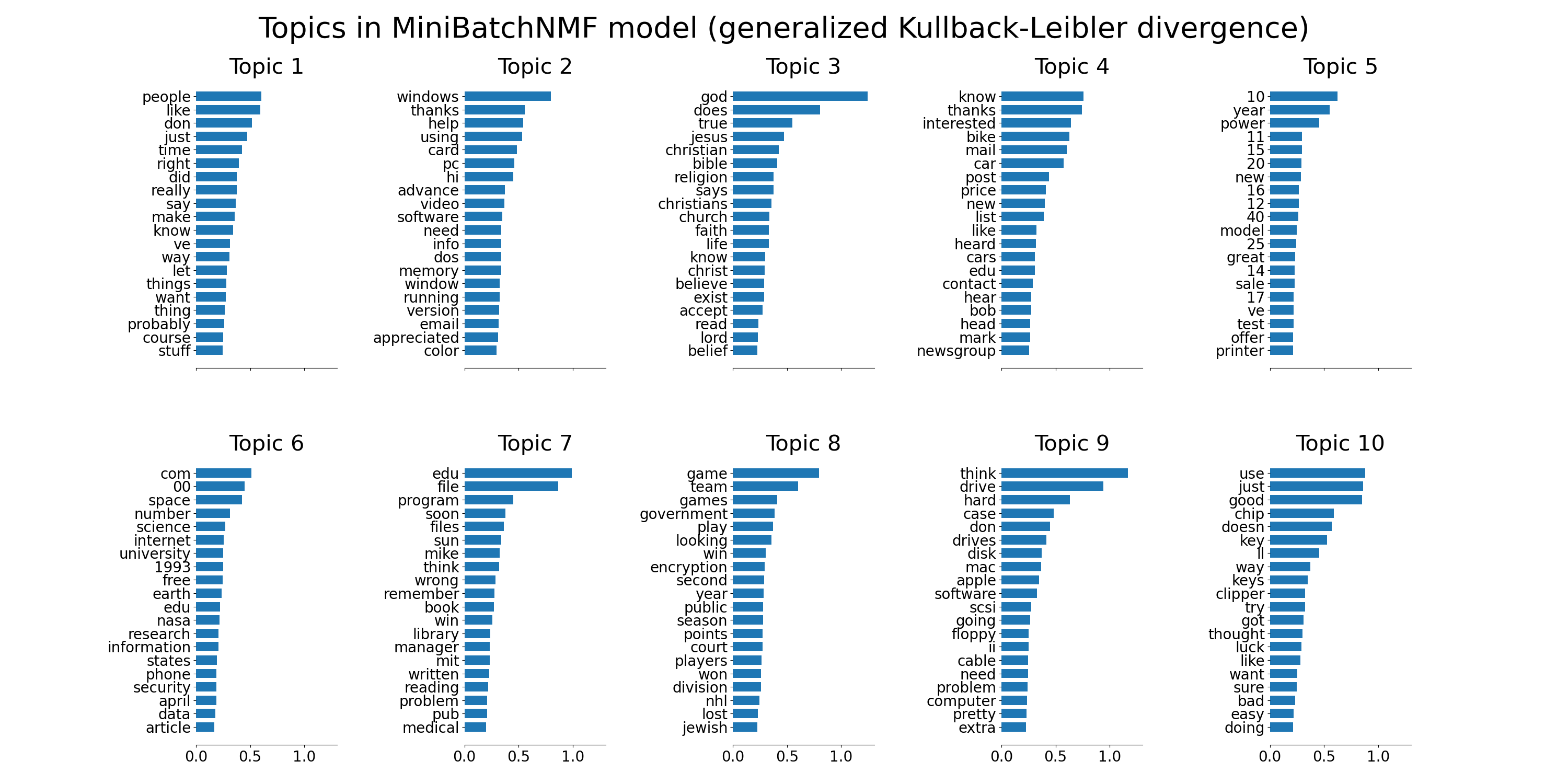

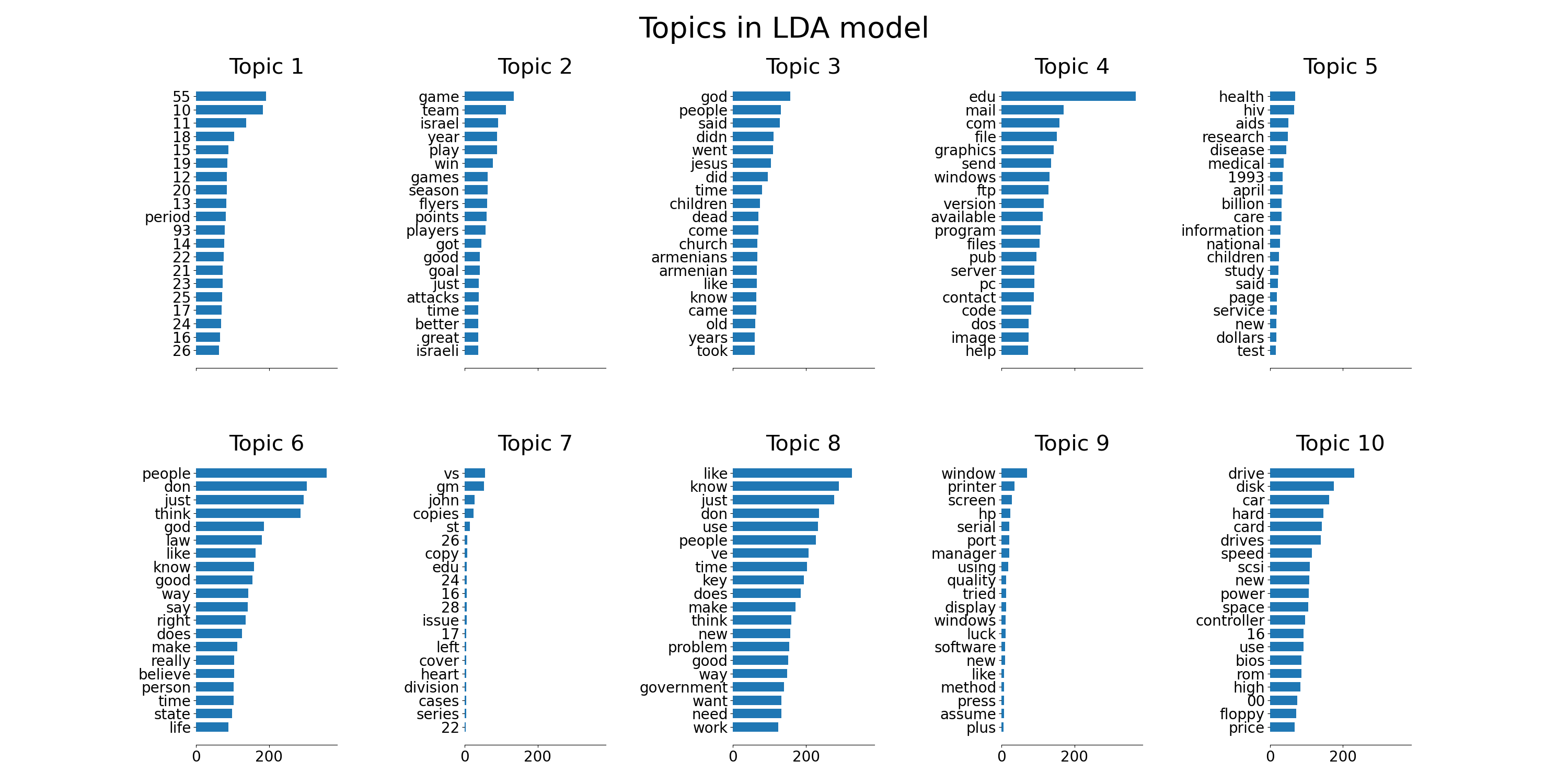

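This example applies NMF and LatentDirichletAllocation to a corpus of documents and extracts additive models of the corpus's topic structure. The output is a set of plots, one per model, in which each topic is shown as a bar chart of its highest-weighted words.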
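As a rough illustration of how "top words by weight" can be read off a topic matrix, here is a minimal sketch using NumPy only. The vocabulary and the weights in `components` are made-up toy values standing in for a fitted model's `components_` attribute.

```python
import numpy as np

# Hypothetical toy data: 2 topics over a 6-word vocabulary.
feature_names = np.array(["ball", "game", "team", "disk", "file", "windows"])
components = np.array(
    [
        [0.9, 0.7, 0.8, 0.0, 0.1, 0.0],  # a "sports"-like topic
        [0.0, 0.1, 0.0, 0.6, 0.9, 0.8],  # a "computers"-like topic
    ]
)
n_top_words = 3

top_words_per_topic = []
for topic in components:
    # argsort is ascending, so the last n_top_words indices carry the
    # largest weights, ordered from smallest to largest.
    top_idx = topic.argsort()[-n_top_words:]
    top_words_per_topic.append(feature_names[top_idx].tolist())

print(top_words_per_topic)
```

Because the selected indices run from smallest to largest weight, a horizontal bar chart drawn from them puts the heaviest word at the top.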

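Non-negative Matrix Factorization is applied with two different objective functions: the Frobenius norm and the generalized Kullback-Leibler divergence. The latter is equivalent to Probabilistic Latent Semantic Indexing.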
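To make the two objectives concrete, the sketch below evaluates both for a data matrix `X` and a reconstruction `WH`. It uses the textbook definitions (half the squared Frobenius norm, and the generalized KL divergence) and leaves out any regularization terms. The helper names are hypothetical, and the KL helper assumes `WH` is strictly positive wherever `X` is positive.

```python
import numpy as np


def frobenius_loss(X, WH):
    """Half the squared Frobenius norm of the residual X - WH."""
    return 0.5 * np.sum((X - WH) ** 2)


def generalized_kl_loss(X, WH):
    """Generalized KL divergence: sum(X * log(X / WH) - X + WH).

    Entries with X == 0 contribute only their WH term (0 * log 0 is taken as 0).
    Assumes WH > 0 wherever X > 0.
    """
    X = np.asarray(X, dtype=float)
    WH = np.asarray(WH, dtype=float)
    mask = X > 0
    log_term = np.sum(X[mask] * np.log(X[mask] / WH[mask]))
    return log_term - X.sum() + WH.sum()


X = np.array([[1.0, 2.0], [3.0, 4.0]])
perfect = X.copy()      # a reconstruction that matches X exactly
doubled = 2.0 * X       # a reconstruction that overshoots everywhere

print(frobenius_loss(X, perfect), generalized_kl_loss(X, perfect))
print(frobenius_loss(X, doubled), generalized_kl_loss(X, doubled))
```

Both losses are zero for a perfect reconstruction and grow as `WH` moves away from `X`, but they penalize the mismatch differently, which is why the two NMF fits can yield different topics.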

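The default parameters (n_samples / n_features / n_components) should make the example run in a few tens of seconds. You can try increasing the dimensions of the problem, but be aware that the time complexity of NMF is polynomial. For LDA, the time complexity is proportional to (n_samples * iterations).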
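The three parameters above set the sizes of the matrices the models work with, which is what drives the cost. As a minimal sketch on random non-negative data (not the newsgroups corpus, with deliberately small sizes chosen for illustration), the shapes of the NMF factors follow directly from them:

```python
import numpy as np
from sklearn.decomposition import NMF

# Small illustrative sizes; the example itself uses 2000 / 1000 / 10.
n_samples, n_features, n_components = 60, 40, 5

rng = np.random.RandomState(0)
X = rng.rand(n_samples, n_features)  # random non-negative stand-in data

model = NMF(n_components=n_components, init="nndsvda", random_state=0, max_iter=500)
W = model.fit_transform(X)  # document-topic weights
H = model.components_       # topic-term weights

print(W.shape, H.shape)
```

`W` has one row per sample and `H` has one column per feature, so increasing any of the three parameters enlarges at least one factor that has to be updated at every iteration.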

Loading dataset...

done in 1.108s.

Extracting tf-idf features for NMF...

done in 0.322s.

Extracting tf features for LDA...

done in 0.301s.

Fitting the NMF model (Frobenius norm) with tf-idf features, n_samples=2000 and n_features=1000...

done in 0.079s.

Fitting the NMF model (generalized Kullback-Leibler divergence) with tf-idf features, n_samples=2000 and n_features=1000...

done in 1.357s.

Fitting the MiniBatchNMF model (Frobenius norm) with tf-idf features, n_samples=2000 and n_features=1000, batch_size=128...

done in 0.083s.

Fitting the MiniBatchNMF model (generalized Kullback-Leibler divergence) with tf-idf features, n_samples=2000 and n_features=1000, batch_size=128...

done in 0.221s.

Fitting LDA models with tf features, n_samples=2000 and n_features=1000...

done in 2.119s.

# Authors: The scikit-learn developers

# SPDX-License-Identifier: BSD-3-Clause

from time import time

import matplotlib.pyplot as plt

from sklearn.datasets import fetch_20newsgroups
from sklearn.decomposition import NMF, LatentDirichletAllocation, MiniBatchNMF
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

n_samples = 2000
n_features = 1000
n_components = 10
n_top_words = 20
batch_size = 128
init = "nndsvda"

def plot_top_words(model, feature_names, n_top_words, title):
    # One subplot per topic; the 2 x 5 grid assumes n_components == 10.
    fig, axes = plt.subplots(2, 5, figsize=(30, 15), sharex=True)
    axes = axes.flatten()
    for topic_idx, topic in enumerate(model.components_):
        # argsort is ascending, so the last n_top_words indices have the
        # largest weights.
        top_features_ind = topic.argsort()[-n_top_words:]
        top_features = feature_names[top_features_ind]
        weights = topic[top_features_ind]

        ax = axes[topic_idx]
        ax.barh(top_features, weights, height=0.7)
        ax.set_title(f"Topic {topic_idx + 1}", fontdict={"fontsize": 30})
        ax.tick_params(axis="both", which="major", labelsize=20)
        for i in "top right left".split():
            ax.spines[i].set_visible(False)

    fig.suptitle(title, fontsize=40)
    plt.subplots_adjust(top=0.90, bottom=0.05, wspace=0.90, hspace=0.3)
    plt.show()

# Load the 20 newsgroups dataset and vectorize it. We use a few heuristics
# to filter out useless terms early on: the posts are stripped of headers,
# footers and quoted replies, and common English words, words occurring in
# only one document or in more than 95% of the documents are removed.

print("Loading dataset...")
t0 = time()
data, _ = fetch_20newsgroups(
    shuffle=True,
    random_state=1,
    remove=("headers", "footers", "quotes"),
    return_X_y=True,
)
data_samples = data[:n_samples]
print("done in %0.3fs." % (time() - t0))

# Use tf-idf features for NMF.
print("Extracting tf-idf features for NMF...")
tfidf_vectorizer = TfidfVectorizer(
    max_df=0.95, min_df=2, max_features=n_features, stop_words="english"
)
t0 = time()
tfidf = tfidf_vectorizer.fit_transform(data_samples)
print("done in %0.3fs." % (time() - t0))

# Use tf (raw term count) features for LDA.
print("Extracting tf features for LDA...")
tf_vectorizer = CountVectorizer(
    max_df=0.95, min_df=2, max_features=n_features, stop_words="english"
)
t0 = time()
tf = tf_vectorizer.fit_transform(data_samples)
print("done in %0.3fs." % (time() - t0))
print()

# Fit the NMF model with the Frobenius norm objective
print(
    "Fitting the NMF model (Frobenius norm) with tf-idf features, "
    "n_samples=%d and n_features=%d..." % (n_samples, n_features)
)
t0 = time()
nmf = NMF(
    n_components=n_components,
    random_state=1,
    init=init,
    beta_loss="frobenius",
    alpha_W=0.00005,
    alpha_H=0.00005,
    l1_ratio=1,
).fit(tfidf)
print("done in %0.3fs." % (time() - t0))

tfidf_feature_names = tfidf_vectorizer.get_feature_names_out()
plot_top_words(
    nmf, tfidf_feature_names, n_top_words, "Topics in NMF model (Frobenius norm)"
)

# Fit the NMF model with the generalized Kullback-Leibler divergence objective
print(
    "\n" * 2,
    "Fitting the NMF model (generalized Kullback-Leibler "
    "divergence) with tf-idf features, n_samples=%d and n_features=%d..."
    % (n_samples, n_features),
)
t0 = time()
nmf = NMF(
    n_components=n_components,
    random_state=1,
    init=init,
    beta_loss="kullback-leibler",
    solver="mu",
    max_iter=1000,
    alpha_W=0.00005,
    alpha_H=0.00005,
    l1_ratio=0.5,
).fit(tfidf)
print("done in %0.3fs." % (time() - t0))

tfidf_feature_names = tfidf_vectorizer.get_feature_names_out()
plot_top_words(
    nmf,
    tfidf_feature_names,
    n_top_words,
    "Topics in NMF model (generalized Kullback-Leibler divergence)",
)

# Fit the MiniBatchNMF model with the Frobenius norm objective
print(
    "\n" * 2,
    "Fitting the MiniBatchNMF model (Frobenius norm) with tf-idf "
    "features, n_samples=%d and n_features=%d, batch_size=%d..."
    % (n_samples, n_features, batch_size),
)
t0 = time()
mbnmf = MiniBatchNMF(
    n_components=n_components,
    random_state=1,
    batch_size=batch_size,
    init=init,
    beta_loss="frobenius",
    alpha_W=0.00005,
    alpha_H=0.00005,
    l1_ratio=0.5,
).fit(tfidf)
print("done in %0.3fs." % (time() - t0))

tfidf_feature_names = tfidf_vectorizer.get_feature_names_out()
plot_top_words(
    mbnmf,
    tfidf_feature_names,
    n_top_words,
    "Topics in MiniBatchNMF model (Frobenius norm)",
)

# Fit the MiniBatchNMF model with the generalized Kullback-Leibler divergence
# objective
print(
    "\n" * 2,
    "Fitting the MiniBatchNMF model (generalized Kullback-Leibler "
    "divergence) with tf-idf features, n_samples=%d and n_features=%d, "
    "batch_size=%d..." % (n_samples, n_features, batch_size),
)
t0 = time()
mbnmf = MiniBatchNMF(
    n_components=n_components,
    random_state=1,
    batch_size=batch_size,
    init=init,
    beta_loss="kullback-leibler",
    alpha_W=0.00005,
    alpha_H=0.00005,
    l1_ratio=0.5,
).fit(tfidf)
print("done in %0.3fs." % (time() - t0))

tfidf_feature_names = tfidf_vectorizer.get_feature_names_out()
plot_top_words(
    mbnmf,
    tfidf_feature_names,
    n_top_words,
    "Topics in MiniBatchNMF model (generalized Kullback-Leibler divergence)",
)

# Fit the LDA model on the raw term counts
print(
    "\n" * 2,
    "Fitting LDA models with tf features, n_samples=%d and n_features=%d..."
    % (n_samples, n_features),
)
lda = LatentDirichletAllocation(
    n_components=n_components,
    max_iter=5,
    learning_method="online",
    learning_offset=50.0,
    random_state=0,
)
t0 = time()
lda.fit(tf)
print("done in %0.3fs." % (time() - t0))

tf_feature_names = tf_vectorizer.get_feature_names_out()
plot_top_words(lda, tf_feature_names, n_top_words, "Topics in LDA model")
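The plots above show the topic-word side of each model. As a complementary sketch, the snippet below looks at the document-topic side of LDA on a tiny made-up corpus. The documents, the variable names and the choice of two topics are assumptions for illustration only and are not part of the example above.

```python
import numpy as np
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical four-document corpus with two rough themes.
docs = [
    "the team won the game with a late goal",
    "the goalkeeper saved the game for the team",
    "install the driver and reboot the computer",
    "the computer needs a new graphics driver",
]

# LDA works on raw term counts, as in the example above.
counts = CountVectorizer(stop_words="english").fit_transform(docs)

lda_demo = LatentDirichletAllocation(n_components=2, random_state=0).fit(counts)

# transform returns one row per document; each row is a distribution over topics.
doc_topic = lda_demo.transform(counts)
print(doc_topic.round(2))
```

Each row of `doc_topic` sums to 1, so it can be read as the estimated share of each topic in that document. On a corpus this small the split between topics is not expected to be stable.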

Total running time of the script: (0 minutes 11.286 seconds)
