注意

跳到結尾下載完整的範例程式碼。 或通過 JupyterLite 或 Binder 在您的瀏覽器中執行此範例

隨機森林的袋外錯誤#

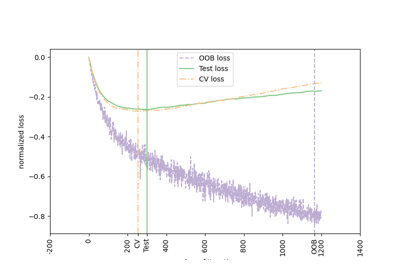

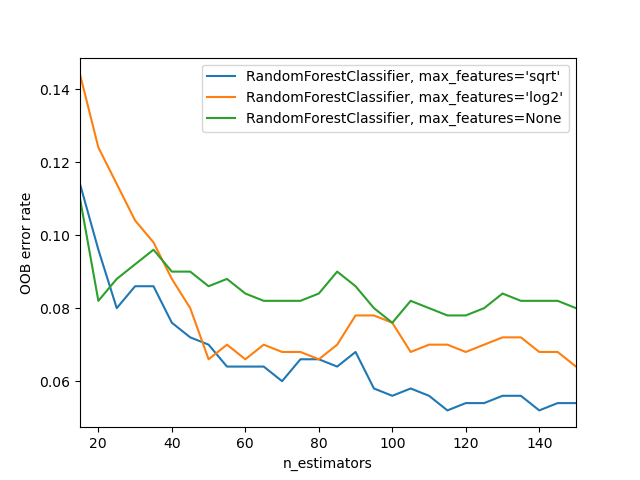

RandomForestClassifier 使用自舉聚合進行訓練,其中每棵新樹都是從訓練觀察的自舉樣本 \(z_i = (x_i, y_i)\) 中擬合的。袋外 (OOB) 錯誤是每個 \(z_i\) 的平均錯誤,使用各自自舉樣本中不包含 \(z_i\) 的樹的預測計算得出。這允許在訓練時擬合和驗證 RandomForestClassifier [1]。

下面的範例示範如何在訓練期間,在每次新增新樹時測量 OOB 錯誤。產生的圖表允許從業者近似一個合適的 n_estimators 值,在此值處錯誤會趨於穩定。

# Authors: The scikit-learn developers

# SPDX-License-Identifier: BSD-3-Clause

from collections import OrderedDict

import matplotlib.pyplot as plt

from sklearn.datasets import make_classification

from sklearn.ensemble import RandomForestClassifier

RANDOM_STATE = 123

# Generate a binary classification dataset.

X, y = make_classification(

n_samples=500,

n_features=25,

n_clusters_per_class=1,

n_informative=15,

random_state=RANDOM_STATE,

)

# NOTE: Setting the `warm_start` construction parameter to `True` disables

# support for parallelized ensembles but is necessary for tracking the OOB

# error trajectory during training.

ensemble_clfs = [

(

"RandomForestClassifier, max_features='sqrt'",

RandomForestClassifier(

warm_start=True,

oob_score=True,

max_features="sqrt",

random_state=RANDOM_STATE,

),

),

(

"RandomForestClassifier, max_features='log2'",

RandomForestClassifier(

warm_start=True,

max_features="log2",

oob_score=True,

random_state=RANDOM_STATE,

),

),

(

"RandomForestClassifier, max_features=None",

RandomForestClassifier(

warm_start=True,

max_features=None,

oob_score=True,

random_state=RANDOM_STATE,

),

),

]

# Map a classifier name to a list of (<n_estimators>, <error rate>) pairs.

error_rate = OrderedDict((label, []) for label, _ in ensemble_clfs)

# Range of `n_estimators` values to explore.

min_estimators = 15

max_estimators = 150

for label, clf in ensemble_clfs:

for i in range(min_estimators, max_estimators + 1, 5):

clf.set_params(n_estimators=i)

clf.fit(X, y)

# Record the OOB error for each `n_estimators=i` setting.

oob_error = 1 - clf.oob_score_

error_rate[label].append((i, oob_error))

# Generate the "OOB error rate" vs. "n_estimators" plot.

for label, clf_err in error_rate.items():

xs, ys = zip(*clf_err)

plt.plot(xs, ys, label=label)

plt.xlim(min_estimators, max_estimators)

plt.xlabel("n_estimators")

plt.ylabel("OOB error rate")

plt.legend(loc="upper right")

plt.show()

指令碼的總執行時間:(0 分鐘 4.478 秒)

相關範例