
Gaussian Mixture Model Ellipsoids

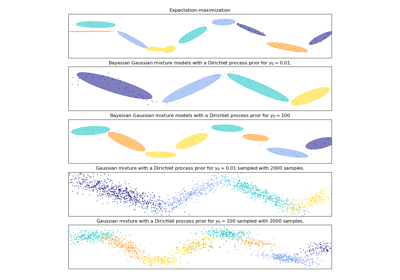

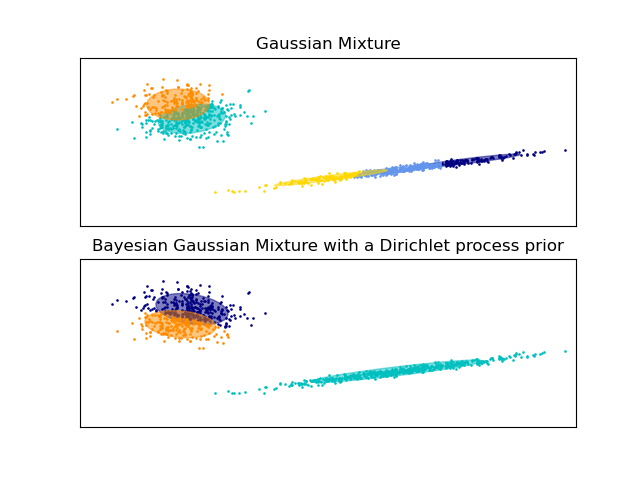

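Plot the confidence ellipsoids of a mixture of two Gaussians, fitted once with Expectation Maximisation (the GaussianMixture class) and once with Variational Inference (the BayesianGaussianMixture class with a Dirichlet process prior).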
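The ellipse geometry used in plot_results can be isolated into a small helper. This is a sketch, not part of the scikit-learn example: the name covariance_to_ellipse and its n_std parameter are hypothetical. It relies on scipy.linalg.eigh returning eigenvalues in ascending order and eigenvectors as the columns of its second output, and it uses full axis lengths of 2 * sqrt(2) * sqrt(eigenvalue), the same scaling as the example.

```python
import numpy as np
from scipy import linalg


def covariance_to_ellipse(covariance, n_std=np.sqrt(2.0)):
    """Hypothetical helper: map a 2x2 covariance to (width, height, angle).

    Width and height are full axis lengths, 2 * n_std * sqrt(eigenvalue);
    the default n_std = sqrt(2) reproduces the 2 * sqrt(2) * sqrt(v)
    scaling in plot_results. The angle (in degrees) is the direction of
    the eigenvector paired with the width.
    """
    # eigh returns eigenvalues in ascending order and eigenvectors as columns
    eigenvalues, eigenvectors = linalg.eigh(covariance)
    width, height = 2.0 * n_std * np.sqrt(eigenvalues)
    u = eigenvectors[:, 0]
    angle = np.degrees(np.arctan2(u[1], u[0]))
    return width, height, angle


# Axis-aligned case: variance 4 along x, 1 along y.
w, h, a = covariance_to_ellipse(np.array([[4.0, 0.0], [0.0, 1.0]]))
print(w, h, a)  # the short axis (width) points along y, so |angle| is 90
```

matplotlib.patches.Ellipse lays the width along the given angle, so pairing the smallest eigenvalue with its own eigenvector column keeps the drawn ellipse aligned with the data; angles that differ by 180 degrees draw the same ellipse.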

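Both models have access to five components with which to fit the data. Note that the Expectation Maximisation model will necessarily use all five components, while the Variational Inference model will effectively use only as many as are needed for a good fit. Here we can see that the Expectation Maximisation model splits some components arbitrarily, because it is trying to fit too many components, while the Dirichlet process model adapts its number of components automatically.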
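One way to check how many components each model actually uses is to inspect the fitted weights_ attribute. The sketch below regenerates data of the same shape with a RandomState generator (so the points differ slightly from the example's), and the n_effective_components helper and its 0.01 threshold are hypothetical choices for illustration, not a scikit-learn API.

```python
import numpy as np
from sklearn import mixture

# Same construction as the example, but with its own generator (assumption).
rng = np.random.RandomState(0)
C = np.array([[0.0, -0.1], [1.7, 0.4]])
X = np.r_[
    rng.randn(500, 2) @ C,
    0.7 * rng.randn(500, 2) + np.array([-6, 3]),
]


def n_effective_components(weights, threshold=0.01):
    """Hypothetical helper: count components whose weight exceeds `threshold`."""
    return int(np.sum(np.asarray(weights) > threshold))


gmm = mixture.GaussianMixture(
    n_components=5, covariance_type="full", random_state=0
).fit(X)
dpgmm = mixture.BayesianGaussianMixture(
    n_components=5, covariance_type="full", max_iter=500, random_state=0
).fit(X)

print("EM weights:", np.round(gmm.weights_, 3))
print("DP weights:", np.round(dpgmm.weights_, 3))
print("EM effective components:", n_effective_components(gmm.weights_))
print("DP effective components:", n_effective_components(dpgmm.weights_))
```

The exact weights depend on the data and the initialization; the comparison of interest is that the Dirichlet process prior can push the weights of unneeded components toward zero, which the EM fit has no mechanism for.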

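This example does not show it, because we are in a low-dimensional space, but another advantage of the Dirichlet process model is that it can fit full covariance matrices effectively even when there are fewer examples per cluster than there are dimensions in the data, thanks to the regularization properties of the inference algorithm.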
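The regularization claim can be made concrete with a deliberately tiny data set: five samples in ten dimensions. The sample covariance then has rank at most four and cannot be inverted, whereas in the variational update of BayesianGaussianMixture the covariance_prior matrix is added to the weighted scatter before dividing by the degrees of freedom, which keeps the estimate positive definite. This is a minimal sketch under one assumption: an explicit identity covariance_prior is passed so the effect is easy to see (the example above uses the defaults).

```python
import numpy as np
from sklearn.mixture import BayesianGaussianMixture

rng = np.random.RandomState(0)
n_samples, n_features = 5, 10  # fewer samples than dimensions
X = rng.randn(n_samples, n_features)

# The sample covariance has rank at most n_samples - 1 = 4 < 10,
# so it is singular and cannot be inverted.
empirical_cov = np.cov(X, rowvar=False)
print("rank of sample covariance:", np.linalg.matrix_rank(empirical_cov))

# Assumption: an identity covariance_prior makes the prior's contribution
# explicit; the posterior covariance is then bounded below by I / dof.
bgmm = BayesianGaussianMixture(
    n_components=1,
    covariance_type="full",
    covariance_prior=np.eye(n_features),
    random_state=0,
).fit(X)
posterior_cov = bgmm.covariances_[0]
print("smallest eigenvalue:", np.linalg.eigvalsh(posterior_cov).min())
```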


# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause

import itertools

import matplotlib as mpl
import matplotlib.pyplot as plt
import numpy as np
from scipy import linalg

from sklearn import mixture

color_iter = itertools.cycle(["navy", "c", "cornflowerblue", "gold", "darkorange"])

def plot_results(X, Y_, means, covariances, index, title):
    splot = plt.subplot(2, 1, 1 + index)
    for i, (mean, covar, color) in enumerate(zip(means, covariances, color_iter)):
        v, w = linalg.eigh(covar)
        v = 2.0 * np.sqrt(2.0) * np.sqrt(v)
        # eigh returns eigenvectors as columns; take the one paired with v[0]
        u = w[:, 0] / linalg.norm(w[:, 0])
        # as the DP will not use every component it has access to
        # unless it needs it, we shouldn't plot the redundant
        # components.
        if not np.any(Y_ == i):
            continue
        plt.scatter(X[Y_ == i, 0], X[Y_ == i, 1], 0.8, color=color)

        # Plot an ellipse to show the Gaussian component
        angle = np.arctan2(u[1], u[0])  # arctan2 also handles u[0] == 0
        angle = 180.0 * angle / np.pi  # convert to degrees
        ell = mpl.patches.Ellipse(mean, v[0], v[1], angle=180.0 + angle, color=color)
        ell.set_clip_box(splot.bbox)
        ell.set_alpha(0.5)
        splot.add_artist(ell)

    plt.xlim(-9.0, 5.0)
    plt.ylim(-3.0, 6.0)
    plt.xticks(())
    plt.yticks(())
    plt.title(title)

# Number of samples per component
n_samples = 500

# Generate random sample, two components
np.random.seed(0)
C = np.array([[0.0, -0.1], [1.7, 0.4]])
X = np.r_[
    np.dot(np.random.randn(n_samples, 2), C),
    0.7 * np.random.randn(n_samples, 2) + np.array([-6, 3]),
]

# Fit a Gaussian mixture with EM using five components
gmm = mixture.GaussianMixture(n_components=5, covariance_type="full").fit(X)
plot_results(X, gmm.predict(X), gmm.means_, gmm.covariances_, 0, "Gaussian Mixture")

# Fit a Dirichlet process Gaussian mixture using five components
dpgmm = mixture.BayesianGaussianMixture(n_components=5, covariance_type="full").fit(X)
plot_results(
    X,
    dpgmm.predict(X),
    dpgmm.means_,
    dpgmm.covariances_,
    1,
    "Bayesian Gaussian Mixture with a Dirichlet process prior",
)

plt.show()
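The synthetic data mixes two clusters: z @ C with z standard normal, whose covariance is C.T @ C, and an isotropic cluster 0.7 * z + [-6, 3] with covariance 0.49 times the identity. A quick numerical check of the first identity follows; the larger sample size and the separate RandomState are choices made only for this sketch.

```python
import numpy as np

C = np.array([[0.0, -0.1], [1.7, 0.4]])

# Rows z ~ N(0, I) mapped to x = z @ C have covariance C.T @ C.
rng = np.random.RandomState(0)
samples = rng.randn(100_000, 2) @ C

expected_cov = C.T @ C
empirical_cov = np.cov(samples, rowvar=False)
print(expected_cov)
print(np.round(empirical_cov, 2))
```

The expected covariance is strongly elongated, which is why EM with five components tends to carve this cluster into several thin pieces.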

Total running time of the script: (0 minutes 0.223 seconds)
