
Column Transformer with Heterogeneous Data Sources#

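Datasets often contain components that require different feature extraction and processing pipelines. This can happen when:

your dataset consists of heterogeneous data types (e.g. raster images and text captions),

your dataset is stored in a pandas.DataFrame and different columns require different processing pipelines.

This example demonstrates how to use ColumnTransformer on a dataset containing different types of features. The choice of features is not particularly helpful, but serves to illustrate the technique.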
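The second case above can be sketched on a tiny DataFrame. This is only an illustration under stated assumptions: the column names `title` and `price`, the toy values, and the choice of CountVectorizer and StandardScaler are hypothetical and are not part of the example that follows.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: one text column and one numeric column.
df = pd.DataFrame(
    {
        "title": ["red apple", "green apple", "red car"],
        "price": [1.0, 2.0, 30.0],
    }
)

ct = ColumnTransformer(
    [
        # A scalar column name hands a 1-D column to the transformer,
        # which is what text vectorizers expect.
        ("title_bow", CountVectorizer(), "title"),
        # A list of column names hands a 2-D selection to the transformer.
        ("price_scaled", StandardScaler(), ["price"]),
    ]
)

features = ct.fit_transform(df)
# 4 vocabulary terms (apple, car, green, red) plus 1 scaled numeric column.
print(features.shape)
```

The pipeline later in this example selects columns with the scalar integers 0 and 1 for the same reason: each text vectorizer receives a 1-D sequence of strings.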

# Authors: The scikit-learn developers
# SPDX-License-Identifier: BSD-3-Clause

import numpy as np

from sklearn.compose import ColumnTransformer
from sklearn.datasets import fetch_20newsgroups
from sklearn.decomposition import PCA
from sklearn.feature_extraction import DictVectorizer
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics import classification_report
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import FunctionTransformer
from sklearn.svm import LinearSVC

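20 newsgroups dataset#

We will use the 20 newsgroups dataset, which comprises posts from newsgroups on 20 topics. The dataset is split into train and test subsets based on whether a message was posted before or after a specific date. We will only use posts from 2 categories to speed up running time.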

categories = ["sci.med", "sci.space"]
X_train, y_train = fetch_20newsgroups(
    random_state=1,
    subset="train",
    categories=categories,
    remove=("footers", "quotes"),
    return_X_y=True,
)
X_test, y_test = fetch_20newsgroups(
    random_state=1,
    subset="test",
    categories=categories,
    remove=("footers", "quotes"),
    return_X_y=True,
)

Each sample is the raw text of one post: header metadata, such as the subject, followed by the body of the post.

print(X_train[0])

From: mccall@mksol.dseg.ti.com (fred j mccall 575-3539)
Subject: Re: Metric vs English
Article-I.D.: mksol.1993Apr6.131900.8407
Organization: Texas Instruments Inc
Lines: 31

American, perhaps, but nothing military about it. I learned (mostly)
slugs when we talked English units in high school physics and while
the teacher was an ex-Navy fighter jock the book certainly wasn't
produced by the military.

[Poundals were just too flinking small and made the math come out
funny; sort of the same reason proponents of SI give for using that.]

--
"Insisting on perfect safety is for people who don't have the balls to live
in the real world." -- Mary Shafer, NASA Ames Dryden

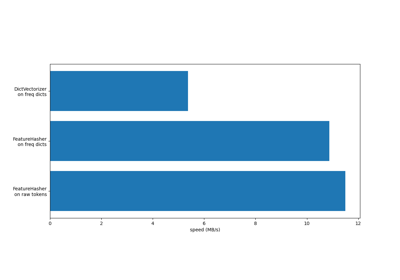

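Creating transformers#

First, we need a transformer that extracts the subject and body of each post. Since this is a stateless transformation (it does not require any state learned from the training data), we can define a function that performs the transformation and then wrap it in FunctionTransformer to create a scikit-learn transformer.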

def subject_body_extractor(posts):
    # construct object dtype array with two columns
    # first column = 'subject' and second column = 'body'
    features = np.empty(shape=(len(posts), 2), dtype=object)
    for i, text in enumerate(posts):
        # temporary variable `_` stores '\n\n'
        headers, _, body = text.partition("\n\n")
        # store body text in second column
        features[i, 1] = body

        prefix = "Subject:"
        sub = ""
        # save text after 'Subject:' in first column
        for line in headers.split("\n"):
            if line.startswith(prefix):
                sub = line[len(prefix) :]
                break
        features[i, 0] = sub

    return features


subject_body_transformer = FunctionTransformer(subject_body_extractor)
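To make the splitting logic concrete, here is a small standalone sketch of the same two steps (partition on the first blank line, then scan the headers for the subject). The post text is made up for illustration and is not taken from the dataset.

```python
# A made-up post (hypothetical, not from the 20 newsgroups data).
post = (
    "From: someone@example.com\n"
    "Subject: Re: Units\n"
    "Lines: 2\n"
    "\n"
    "First body line.\n"
    "\n"
    "Second paragraph."
)

# str.partition splits at the FIRST occurrence of the separator only,
# so blank lines inside the body stay in the body.
headers, sep, body = post.partition("\n\n")

prefix = "Subject:"
subject = ""
for line in headers.split("\n"):
    if line.startswith(prefix):
        # Everything after the prefix is kept, including the leading space.
        subject = line[len(prefix):]
        break

print(repr(subject))
print(repr(body))
```

The subject keeps its leading space. That is harmless here because the default TfidfVectorizer tokenizer extracts word tokens and ignores surrounding whitespace. A post without a `Subject:` header would simply yield an empty subject string.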

We will also create a transformer that extracts the length of the text and the number of sentences.

def text_stats(posts):
    return [{"length": len(text), "num_sentences": text.count(".")} for text in posts]


text_stats_transformer = FunctionTransformer(text_stats)

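Classification pipeline#

The pipeline below uses subject_body_transformer to extract the subject and body from each post, producing an (n_samples, 2) array. A ColumnTransformer then computes standard bag-of-words features for the subject and the body, as well as the text length and number of sentences of the body. We combine these feature blocks with weights, then train a classifier on the combined feature set.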

pipeline = Pipeline(
    [
        # Extract subject & body
        ("subjectbody", subject_body_transformer),
        # Use ColumnTransformer to combine the subject and body features
        (
            "union",
            ColumnTransformer(
                [
                    # bag-of-words for subject (col 0)
                    ("subject", TfidfVectorizer(min_df=50), 0),
                    # bag-of-words with decomposition for body (col 1)
                    (
                        "body_bow",
                        Pipeline(
                            [
                                ("tfidf", TfidfVectorizer()),
                                ("best", PCA(n_components=50, svd_solver="arpack")),
                            ]
                        ),
                        1,
                    ),
                    # Pipeline for pulling text stats from post's body
                    (
                        "body_stats",
                        Pipeline(
                            [
                                (
                                    "stats",
                                    text_stats_transformer,
                                ),  # returns a list of dicts
                                (
                                    "vect",
                                    DictVectorizer(),
                                ),  # list of dicts -> feature matrix
                            ]
                        ),
                        1,
                    ),
                ],
                # weight above ColumnTransformer features
                transformer_weights={
                    "subject": 0.8,
                    "body_bow": 0.5,
                    "body_stats": 1.0,
                },
            ),
        ),
        # Use a SVC classifier on the combined features
        ("svc", LinearSVC(dual=False)),
    ],
    verbose=True,
)

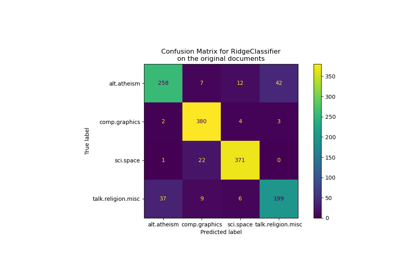
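The effect of `transformer_weights` can be checked in isolation. The following is a minimal sketch, assuming identity transformers and a made-up 2x2 array, so that the only change to the data is the weighting; the names `a` and `b` and the weight values are hypothetical.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import FunctionTransformer

# Hypothetical toy data with two numeric columns.
X = np.array([[1.0, 10.0], [2.0, 20.0]])

ct = ColumnTransformer(
    [
        # FunctionTransformer() with no function is the identity transform.
        ("a", FunctionTransformer(), [0]),
        ("b", FunctionTransformer(), [1]),
    ],
    # Each transformer's output block is multiplied by its weight
    # before the blocks are concatenated.
    transformer_weights={"a": 0.5, "b": 2.0},
)

weighted = ct.fit_transform(X)
print(weighted)
```

Column 0 comes out halved and column 1 doubled. In the pipeline above the same multiplicative scaling is applied to the subject, body bag-of-words, and body statistics blocks, which changes their relative influence on a scale-sensitive classifier such as LinearSVC.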

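Finally, we fit our pipeline on the training data and use it to predict the topics of X_test. The performance metrics of our pipeline are then printed.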

pipeline.fit(X_train, y_train)
y_pred = pipeline.predict(X_test)
print("Classification report:\n\n{}".format(classification_report(y_test, y_pred)))

[Pipeline] ....... (step 1 of 3) Processing subjectbody, total=   0.0s
[Pipeline] ............. (step 2 of 3) Processing union, total=   0.4s
[Pipeline] ............... (step 3 of 3) Processing svc, total=   0.0s
Classification report:

              precision    recall  f1-score   support

           0       0.84      0.87      0.86       396
           1       0.87      0.84      0.85       394

    accuracy                           0.86       790
   macro avg       0.86      0.86      0.86       790
weighted avg       0.86      0.86      0.86       790

Total running time of the script: (0 minutes 2.562 seconds)
